It’s not as fast as it used to be when I bought it!

One of the biggest computer annoyances is when your machine gets slow over time.

This article is in two parts: first, the reasons your computer gets slow, and second, what you can do about it.

When you first get a new computer and boot it up, it works lightning fast. That’s because there’s nothing on it yet, and it’s the fastest it’s ever going to be. Then it starts slowing down. This can take years, but sometimes it happens in just a few short months.

Some computers are never going to stay fast. It’s the way they are designed, and it usually reflects the price you paid: the cheaper the machine, the slower all the internal parts.

Now for a small technical bit. Many computers, especially older ones, have an FSB (front-side bus) speed. You won’t see it in the headline specs because only techies are interested, but it’s the common denominator: the speed at which the processor talks to the memory and the rest of the system, so it can matter as much as the headline processor speed. If you have a slow FSB, you have a machine that will soon start to feel slow.

Now for the other reasons. You fill the computer with your data, you install software and the operating system gets updates; on top of all that, it slows down with age. As a rough rule of thumb, a five-year-old computer will be running around 20% slower due to the ageing of its components.

So there isn’t one single reason why this happens. Whether you have a PC or a Mac, as you download files, install software and surf the Internet, your computer gets bloated with files that hog system resources.

We have to face the fact that, as time goes on, our computers will get slower. It’s a natural progression. The Internet and software capabilities evolve by the minute, and these new innovations need more power and space to keep up. Sometimes it isn’t even your fault that your once zippy computer is now crawling; it’s just a sign of the times.

In addition, many other things contribute to a slowdown:

Hard disk (HD)

As your hard disk fills up, it takes longer to store and retrieve data. This is partly down to the design and quality of the hard disk, but it’s also because of the way data is stored. Spinning hard drives simply start to slow down as they get older and reach the end of their life, and lower-cost drives store and retrieve information more slowly to begin with.

It’s important to note that all spinning hard drives will die eventually. It could be tomorrow or it could be 10 years from now; it’s just the nature of their design.

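A hard disk doesn’t necessarily store a file in one continuous piece. It puts the pieces wherever it can, and when you want the file, the computer looks up where all the pieces are stored and reassembles them. On older disks the table that holds this information is the FAT (File Allocation Table); modern Windows disks use NTFS, where the equivalent is the Master File Table (MFT).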

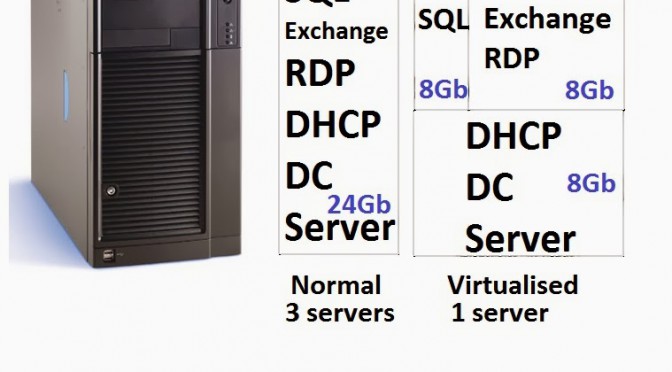
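For the technically curious, here is a toy sketch in Python of how a FAT-style table lets the computer reassemble a file from scattered pieces. It is a simplified, hypothetical model (the cluster contents, the table and the `read_file` function are all made up for illustration), not the real on-disk format, and NTFS does its bookkeeping differently. It also counts the “jumps” where the next piece isn’t right next door; on a spinning drive each jump means the read head has to move, which is part of why a cluttered disk feels slower.

```python
END = -1  # hypothetical marker for the last piece of a file

# What each cluster (a fixed-size chunk of the disk) holds; None means free.
clusters = ["Hel", None, "rld", "lo ", None, "Wo", "!"]

# The table: for each cluster in use, which cluster holds the next piece.
fat = {0: 3, 3: 5, 5: 2, 2: 6, 6: END}

# The folder listing only records where each file starts.
directory = {"hello.txt": 0}


def read_file(name):
    """Reassemble a file by following its chain of clusters through the table."""
    cluster = directory[name]
    pieces = []
    jumps = 0  # times the next piece was not in the very next cluster
    while cluster != END:
        pieces.append(clusters[cluster])
        following = fat[cluster]
        if following != END and following != cluster + 1:
            jumps += 1
        cluster = following
    return "".join(pieces), jumps


text, jumps = read_file("hello.txt")
print(text)   # Hello World!
print(jumps)  # 4
```

Each of those four jumps is a trip for the read head; if the pieces sat side by side, reading the file would need none.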

RAM

All programs are loaded into an area called RAM (memory) while they are being worked on. RAM comes in varying speeds too, and the more programs you have running, the more RAM you use. If there isn’t enough, the computer starts moving things out to the hard disk and using it as extra space, and everything becomes really slow once this starts happening.
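Here is a rough sketch in Python of why running short of RAM hurts so much. It assumes a hypothetical machine where memory is split into “pages”, four programs each need two pages, and you switch between them in turn. When RAM is full, the least recently used page is pushed out to disk (a common textbook policy called LRU; Windows’ real memory manager is more sophisticated). The function name and all the numbers are made up for illustration.

```python
from collections import OrderedDict


def count_disk_reads(accesses, ram_slots):
    """Count page fetches from disk, evicting the least recently used page (LRU)."""
    ram = OrderedDict()  # pages currently in RAM, least recently used first
    disk_reads = 0
    for page in accesses:
        if page in ram:
            ram.move_to_end(page)  # already in RAM: just mark it as recently used
            continue
        disk_reads += 1  # not in RAM: fetch it from the slow disk
        if len(ram) >= ram_slots:
            ram.popitem(last=False)  # RAM full: push out the least recently used page
        ram[page] = True
    return disk_reads


# Hypothetical workload: 4 programs x 2 pages each (pages 0-7), used in turn 10 times.
workload = list(range(8)) * 10

print(count_disk_reads(workload, ram_slots=8))  # 8: everything fits, only the first loads
print(count_disk_reads(workload, ram_slots=6))  # 80: every single access goes to disk
```

With enough RAM, the disk is only touched for the first load of each page; take away a quarter of the RAM and, in this toy model, every access goes to the disk. Real systems are rarely this extreme, but it shows why performance can fall off a cliff rather than fading gradually.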

TSRs, leftovers, Prefetch and Resmon

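Some programs load, run and then appear to finish, but they still hog some RAM. In the old DOS days these were known as Terminate and Stay Resident (TSR) programs; today the equivalent is programs that leave background processes running. Prefetch is another area: Windows remembers which programs you have used so it can start them faster, and it keeps this information in a folder called Prefetch. If you want to see what’s going on, click Start and type resmon to open Resource Monitor.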

Malware, spyware, viruses and bots

These are programs that someone else wants to run on your computer. They deserve an article of their own, but these are all the “nasties” that slow computers down.

Anti-virus packages

Many programs come bundled with an anti-virus package, but people forget to remove the old one before the new one is installed. It’s like leaving your old car in the garage and then trying to drive your new car into the same space: not ideal.

Plug-ins

Everyone wants you to install their toolbar or add-in. Don’t, and if you already have, remove them. All these plug-ins take up RAM, and some of them redirect your Internet searches so that other people can see what you are looking for, and may even copy your credit card details when you buy something online.

Bloatware

This is often the sort of free software that promises to sort out your registry and clean up everything. Free “cleaners” like this are often malware in disguise, and how do you speed things up by adding more software anyway? It’s all unnecessary: it fills up your hard drive and RAM, costing you both space and speed.

Cookies

Cookies are generally good, but like everything, you can have too many. A cookie is a small piece of data that a website you visit asks your web browser to store on your machine.
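If you are curious what a cookie actually looks like, the sketch below uses Python’s built-in `http.cookies` module to read one. The header text is a made-up example of what a website might send: just a short name and value (`session_id=abc123`) plus a couple of housekeeping settings, such as how long the browser should keep it.

```python
from http.cookies import SimpleCookie

# A made-up example of the cookie text a website might send to your browser.
header = "session_id=abc123; Path=/; Max-Age=3600"

cookie = SimpleCookie()
cookie.load(header)  # parse the name=value pair and its attributes

morsel = cookie["session_id"]
print(morsel.key, morsel.value)  # session_id abc123
print(morsel["path"])            # / (the cookie applies to the whole site)
print(morsel["max-age"])         # 3600 (keep it for an hour, in seconds)
```

That’s all a cookie is: a few dozen characters. Each one is tiny, but they build up from every site you visit.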

Data/OS corruption

Corruption is simple: it’s when there are extra, missing or jumbled words. “the quoik briwn FFox umps ova the lzay dgo” You know what I said, but it took longer to read! Software and hard drive corruption are two reasons why your computer may slow down over time.

Corruption can be caused by a host of things, but the main culprits are bugs in the operating system, corrupted data in RAM, static electricity (from carpets or other fabrics), power surges, failing hardware and normal deterioration with age.

Missing Windows updates and old drivers

Installing updates makes Windows bigger, but skipping them can leave security holes open. If you add new devices or get rid of old ones, you can end up with old or unused drivers (the small programs that let Windows talk to your hardware).

Overheating

If your computer sits on the floor, it draws in cooling air full of dust and fluff. This builds up and stops the air circulating properly, so the computer runs hotter. Modern processors, whether Intel or AMD, protect themselves by gradually slowing down as they get too hot (known as thermal throttling), and a badly clogged machine can also become a fire hazard.
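To picture what “slowing down as it warms up” means, here is a toy sketch in Python. The rule is entirely hypothetical: full speed of 3.0 GHz up to a 90 C limit, then 0.1 GHz less for every degree over, never dropping below 0.8 GHz. Real processors use their own, more complicated rules set by the manufacturer, but the idea is the same: the hotter it runs, the slower it goes.

```python
def clock_speed_ghz(temp_c, full_speed_ghz=3.0, limit_c=90, floor_ghz=0.8):
    """Hypothetical throttling rule: lose 0.1 GHz per degree over the limit."""
    if temp_c <= limit_c:
        return full_speed_ghz  # cool enough: run flat out
    reduced = full_speed_ghz - 0.1 * (temp_c - limit_c)
    return max(reduced, floor_ghz)  # never drop below the minimum speed


# Hypothetical temperatures, from a clean machine to increasingly dusty ones.
for temp in (60, 90, 95, 100):
    print(f"{temp}C -> {clock_speed_ghz(temp):.1f} GHz")
```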

Part 2: What can I do?

You can always wipe the computer and start again, but assuming that’s not practical, let’s go through a step-by-step clean-up. It will involve loading programs and going through lists.

First, back up your computer. Don’t do anything until you have. If you want to risk it, just stop and imagine that everything has vanished into thin air and you have to start from scratch. Now make a backup.

Step 1: Check for malware. Most anti-virus programs will try to stop you getting a virus, but Malwarebytes is the most effective software for getting rid of them once you have them. For a belt-and-braces approach, I would recommend starting Windows in Safe Mode and then running Malwarebytes. On Windows 7 and earlier, switch on or restart your computer and keep pressing F8 until a list of options appears, then choose Safe Mode with Networking. On Windows 8 and later, hold Shift while clicking Restart, then choose Troubleshoot, Advanced options, Startup Settings, Restart, and pick Safe Mode with Networking. Then run Malwarebytes, and restart your computer once it has finished.

Step 2: Load and run HijackThis. Once it has run, it displays a list in Notepad. Copy this with Ctrl+C, go to www.hijackthis.de, click in the empty box, press Ctrl+V and then click Analyze. Look down the list: if any entries have a red cross marked “nasty”, call us, because Malwarebytes has missed something.

Step 3: Run an online virus check such as Trend Micro HouseCall or ESET. For HouseCall, go to Google and type Housecall in the search box, make sure the result is a Trend Micro website, and then follow the instructions. For ESET, go to http://www.eset.co.uk/Antivirus-Utilities/Online-Scanner and follow the instructions.

Step 4: If you are running any anti-virus software apart from ESET, then remove it and ask us about a trial ESET licence. If you have Norton or McAfee installed, get rid of them; they will slow your computer down. Other programs are large and bloated, and some don’t work. Only have ONE anti-virus program installed: having two or more will dramatically slow your computer down because they compete with each other.

Step 5: Go to Start, Control Panel and find Programs and Features. Have a good look down the list; some programs you will know and some you will not. Uninstall those you know you don’t need, including all the toolbars, especially the Ask toolbar, Google Toolbar and so on.

Every program you install not only takes up storage space but also adds to the size of the registry. The registry is like an index that the computer checks for program settings, and the bigger the index, the longer it takes to work through.

Step 6: Reboot your machine.

Step 7: Update Windows. Make sure Windows is as up to date as possible. Updates mostly fix security flaws that Microsoft has identified, but they also include bug fixes and other improvements. Windows has a built-in Windows Update option, but in my experience it is sometimes not up to date, so check here first: http://update.microsoft.com/. There may also be optional updates, for example the latest versions of Internet Explorer or Windows Media Player. They are optional, but I would recommend installing them anyway.

Step 8: Delete temporary files, fix the registry and stop unnecessary start-up programs. This can get a bit techy: start-up programs run when your computer starts and take up valuable memory, so if you don’t know what one does, search for it on Google before disabling it. Really, this step is best done by a technician.

Step 9: Defragment your hard drive. Imagine a cassette tape with your favourite songs on it. Now imagine you delete a couple of songs and want to add a new one. There isn’t enough room for it in either of the gaps, but it can be split across them. Eventually, after deleting and adding more songs, the pieces of songs are all over the tape. This is called fragmentation. The hard disk in your computer works in the same way, but we can use a program to move the pieces back together so files can be read more efficiently. This is defragmentation! It applies to spinning hard drives only; a solid-state drive (SSD) doesn’t need defragmenting.
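The cassette tape analogy can be sketched as a toy Python model. The “tape” is a row of ten slots, a new song goes into the first free slots it finds (splitting across gaps if it has to), and `defragment` rewrites each song into one unbroken run. The helper names are hypothetical, and real defragmenters move data around in place and far more carefully, but the before-and-after picture is the same.

```python
def write_file(disk, name, size):
    """Store a file in the first free slots, splitting it across gaps if needed."""
    free = [i for i, slot in enumerate(disk) if slot is None]
    if len(free) < size:
        raise ValueError("not enough free space")
    for i in free[:size]:
        disk[i] = name


def delete_file(disk, name):
    """Free every slot the file was using."""
    for i, slot in enumerate(disk):
        if slot == name:
            disk[i] = None


def fragments(disk, name):
    """Count how many separate pieces a file is split into."""
    pieces, previous = 0, None
    for slot in disk:
        if slot == name and previous != name:
            pieces += 1
        previous = slot
    return pieces


def defragment(disk):
    """Rewrite every file as one unbroken run, with all free space at the end."""
    order = []
    for slot in disk:
        if slot is not None and slot not in order:
            order.append(slot)
    packed = [name for name in order for _ in range(disk.count(name))]
    disk[:] = packed + [None] * (len(disk) - len(packed))


def show(disk):
    """Draw the tape: one letter per used slot, '.' for a free slot."""
    return "".join(slot or "." for slot in disk)


tape = [None] * 10
write_file(tape, "A", 3)
write_file(tape, "B", 2)
write_file(tape, "C", 3)
delete_file(tape, "B")    # delete a song, leaving a 2-slot gap
write_file(tape, "D", 4)  # too big for the gap, so it is split
print(show(tape), fragments(tape, "D"))  # AAADDCCCDD 2
defragment(tape)
print(show(tape), fragments(tape, "D"))  # AAADDDDCCC 1
```

Before defragmenting, song D is in two pieces with song C in the middle; afterwards it can be played straight through.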

If your computer is still slow after all of the above, you might need more memory (RAM). Unfortunately, there are many different types of memory, so check which type your computer needs before buying any.

If you want us to do all this for you, it will take between one and two hours, and it might just keep your machine working for another year or two.